# Data Stores

Data stores are Pipedream's built-in key-value store.

Data stores are useful for:

- Storing and retrieving data at a specific key

- Counting or summing values over time

- Retrieving JSON-serializable data across workflow executions

- Caching

- And any other case where you'd use a key-value store

You can connect to the same data store across workflows, so they're also great for sharing state across different services.

You can use pre-built, no-code actions to store, update, and clear data, or interact with data stores programmatically in Node.js or Python.
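As a rough illustration of the "counting or summing values over time" use case, here is a minimal sketch of a counter helper. It assumes the store exposes async `get` and `set` methods and that a missing key reads as `undefined`; `createMemoryStore` and `incrementCounter` are hypothetical names, and the in-memory store exists only so the helper can be run outside Pipedream.

```javascript
// In-memory stand-in for a data store (an assumption, for local runs only).
// It mimics an async get/set interface so the helper below can be exercised
// outside of a workflow.
function createMemoryStore() {
  const records = new Map();
  return {
    async get(key) {
      return records.get(key);
    },
    async set(key, value) {
      records.set(key, value);
    },
  };
}

// Hypothetical helper: add `amount` to the number stored at `key`,
// treating a missing key as 0. The read-then-write is not atomic, so
// concurrent executions could overwrite each other's updates.
async function incrementCounter(store, key, amount = 1) {
  const current = (await store.get(key)) ?? 0;
  const next = current + amount;
  await store.set(key, next);
  return next;
}
```

In a Node.js code step you would pass the step's data store prop as `store` instead of the in-memory stand-in.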

# Using pre-built Data Store actions

Pipedream provides several pre-built actions to set, get, delete, and perform other operations with data stores.

# Inserting data

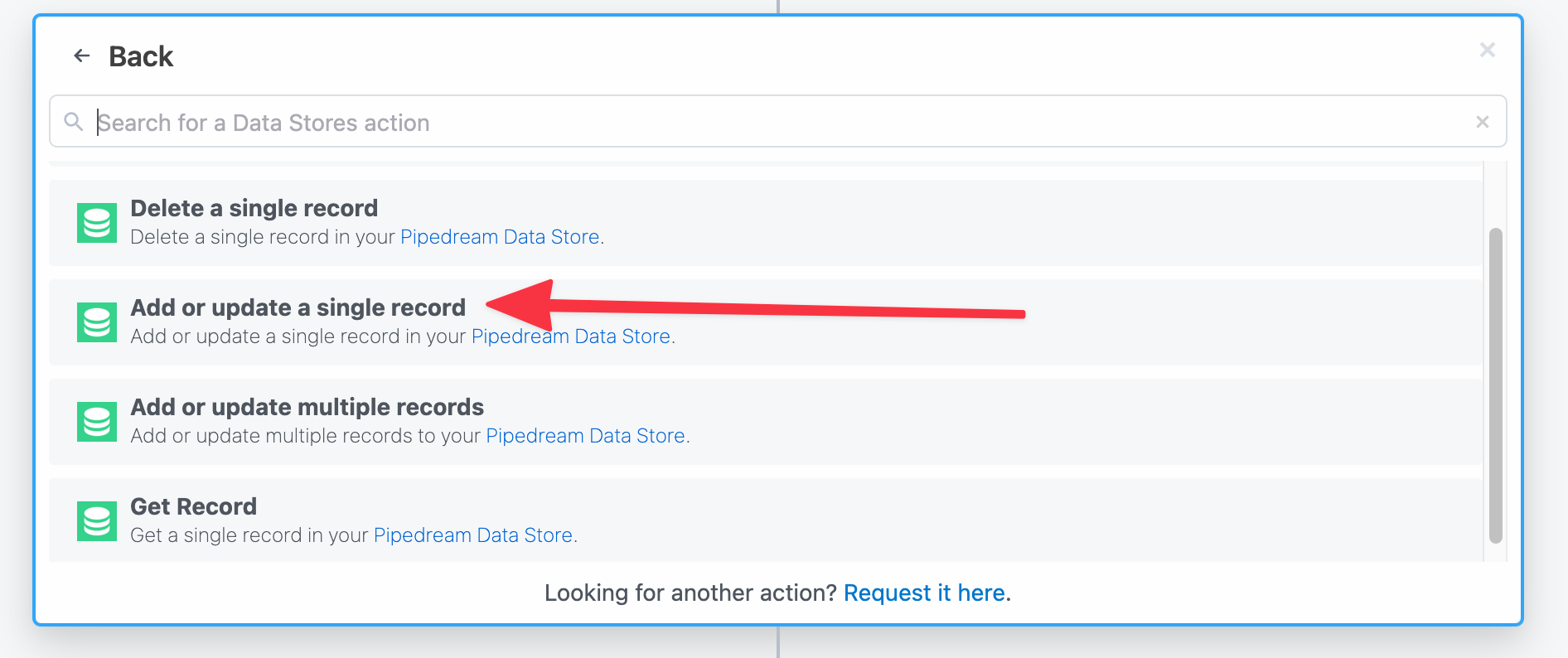

To insert data into a data store:

- Add a new step to your workflow.

- Search for the Data Stores app and select it.

- Select the Add or update a single record pre-built action.

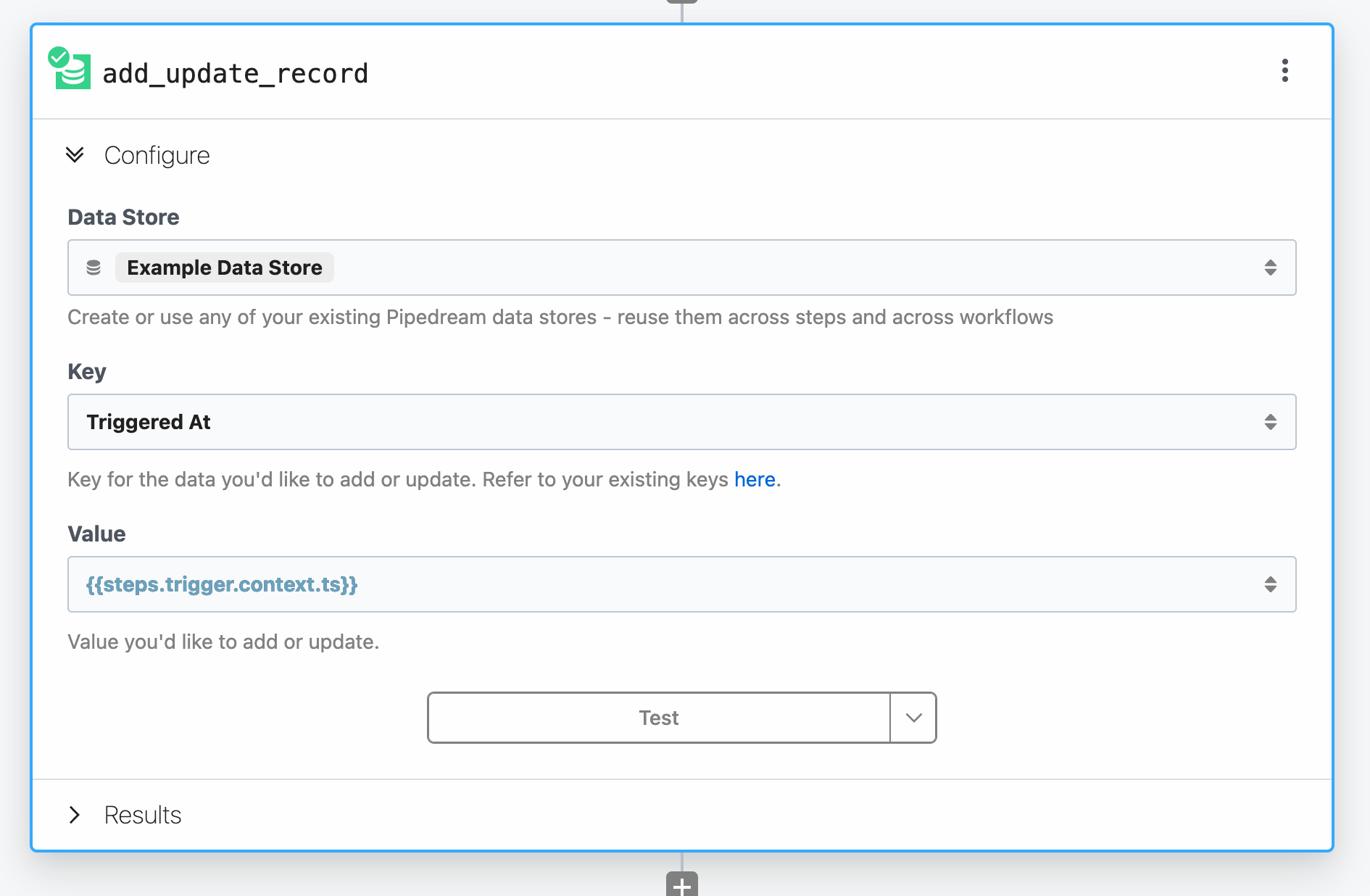

Configure the action:

- Select or create a Data Store — create a new data store or choose an existing data store.

- Key - the unique ID for this data that you'll use for lookup later

- Value - the data to store at the specified key

For example, to store the timestamp when the workflow was initially triggered, set the Key to Triggered At and the Value to {{steps.trigger.context.ts}}.

The Key must evaluate to a string. You can pass a static string, reference exports from a previous step, or use any valid expression.

TIP

Need to store multiple records in one action? Use the Add or update multiple records action instead.

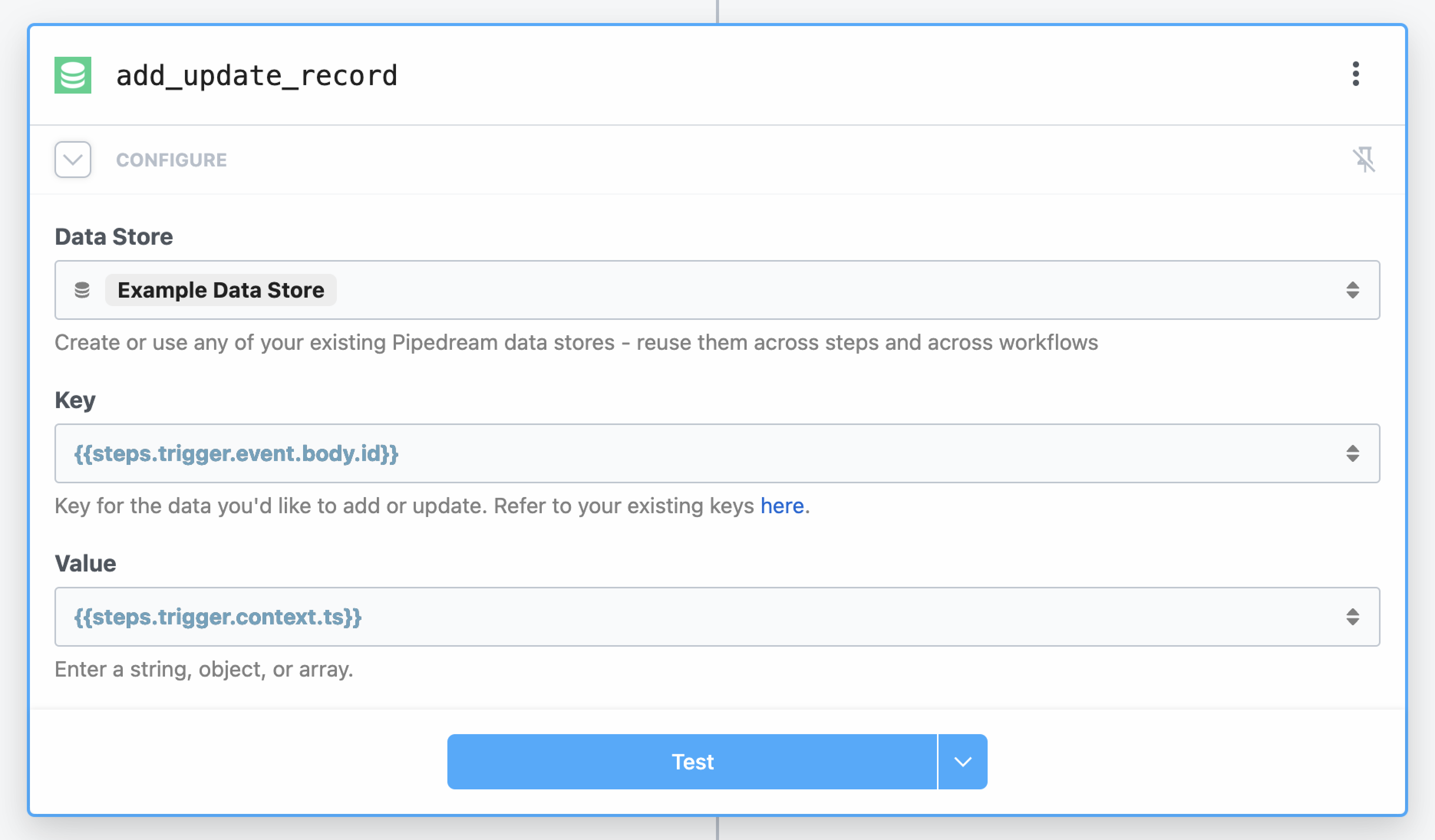

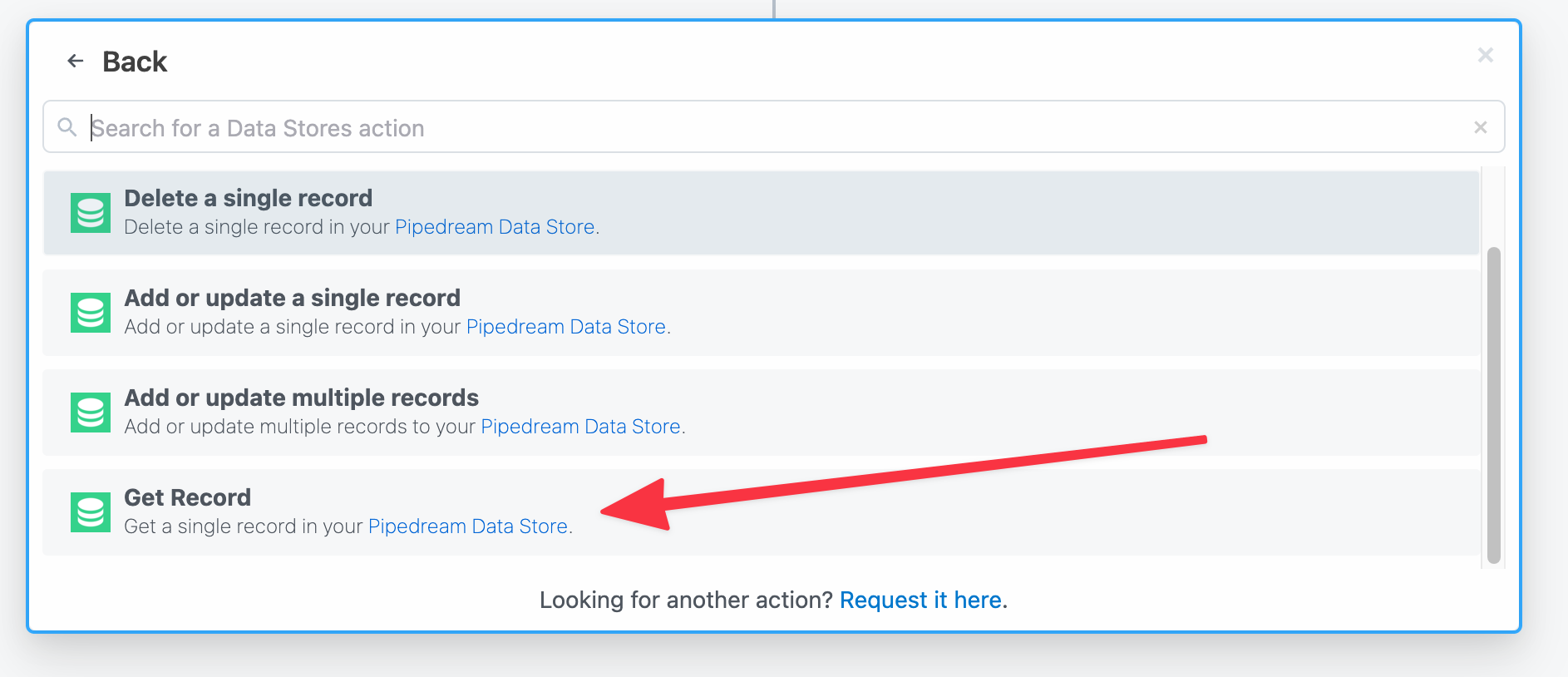

# Retrieving data

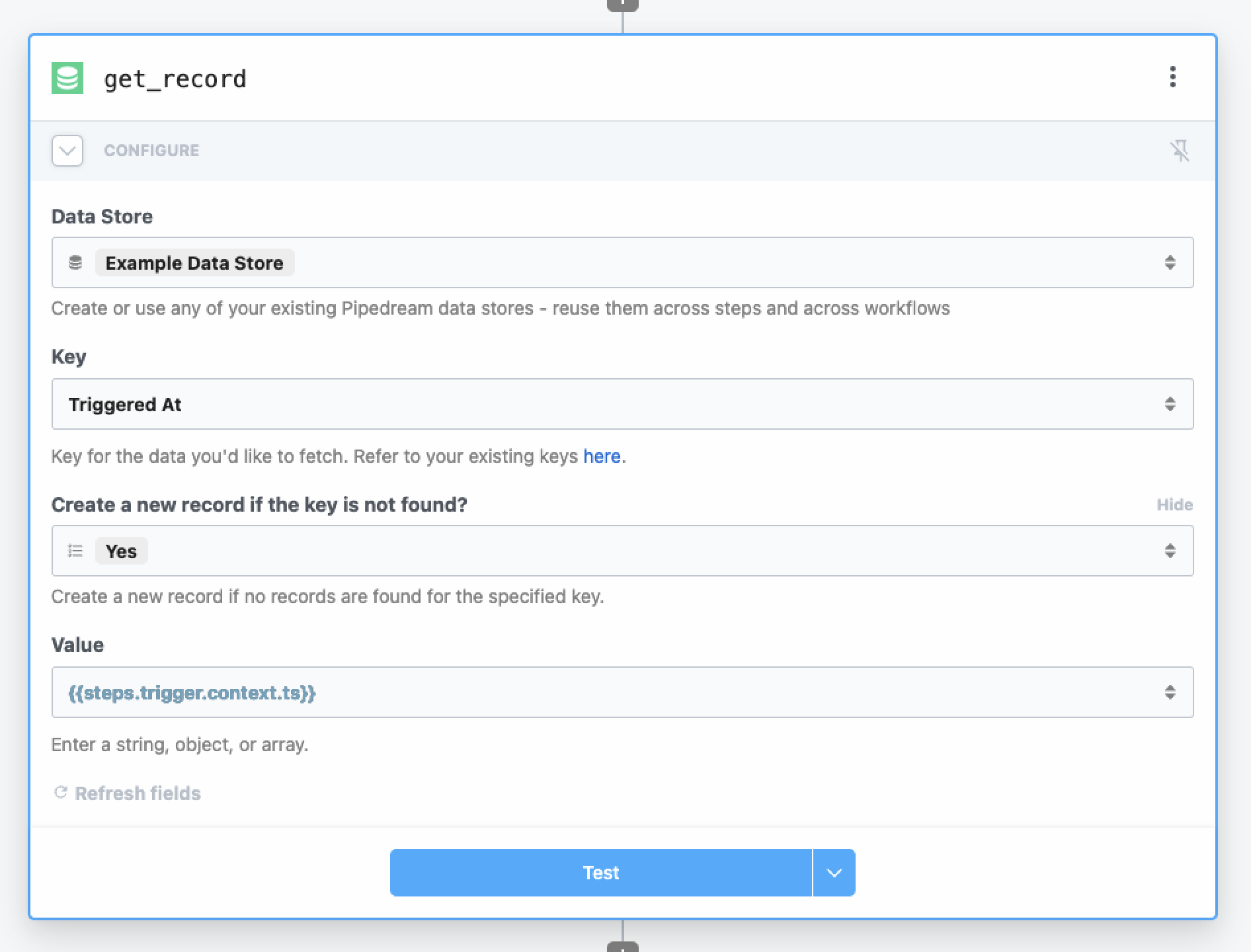

The Get record action will retrieve the latest value of a data point in one of your data stores.

- Add a new step to your workflow.

- Search for the Data Stores app and select it.

- Select the Get record pre-built action.

Configure the action:

- Select or create a Data Store — create a new data store or choose an existing data store.

- Key - the unique ID of the record you want to retrieve

- Create new record if key is not found - if the specified key isn't found, you can create a new record

- Value - the data to store at the specified key if a new record is created
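The "create new record if key is not found" behavior can be approximated in code with a get-then-set pattern. The sketch below is an illustration only, not the action's actual implementation: `getOrCreate` and `createStubStore` are hypothetical names, and the stub store assumes an async `get`/`set` interface where a missing key reads as `undefined`.

```javascript
// In-memory stub (an assumption) so the example runs outside Pipedream.
function createStubStore() {
  const records = new Map();
  return {
    async get(key) {
      return records.get(key);
    },
    async set(key, value) {
      records.set(key, value);
    },
  };
}

// Hypothetical helper: return the stored value, or store and return
// `defaultValue` when nothing is saved at `key` yet.
async function getOrCreate(store, key, defaultValue) {
  const existing = await store.get(key);
  if (existing !== undefined && existing !== null) {
    return { value: existing, created: false };
  }
  await store.set(key, defaultValue);
  return { value: defaultValue, created: true };
}
```

The `created` flag lets a later step tell a first-time write apart from a plain lookup.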

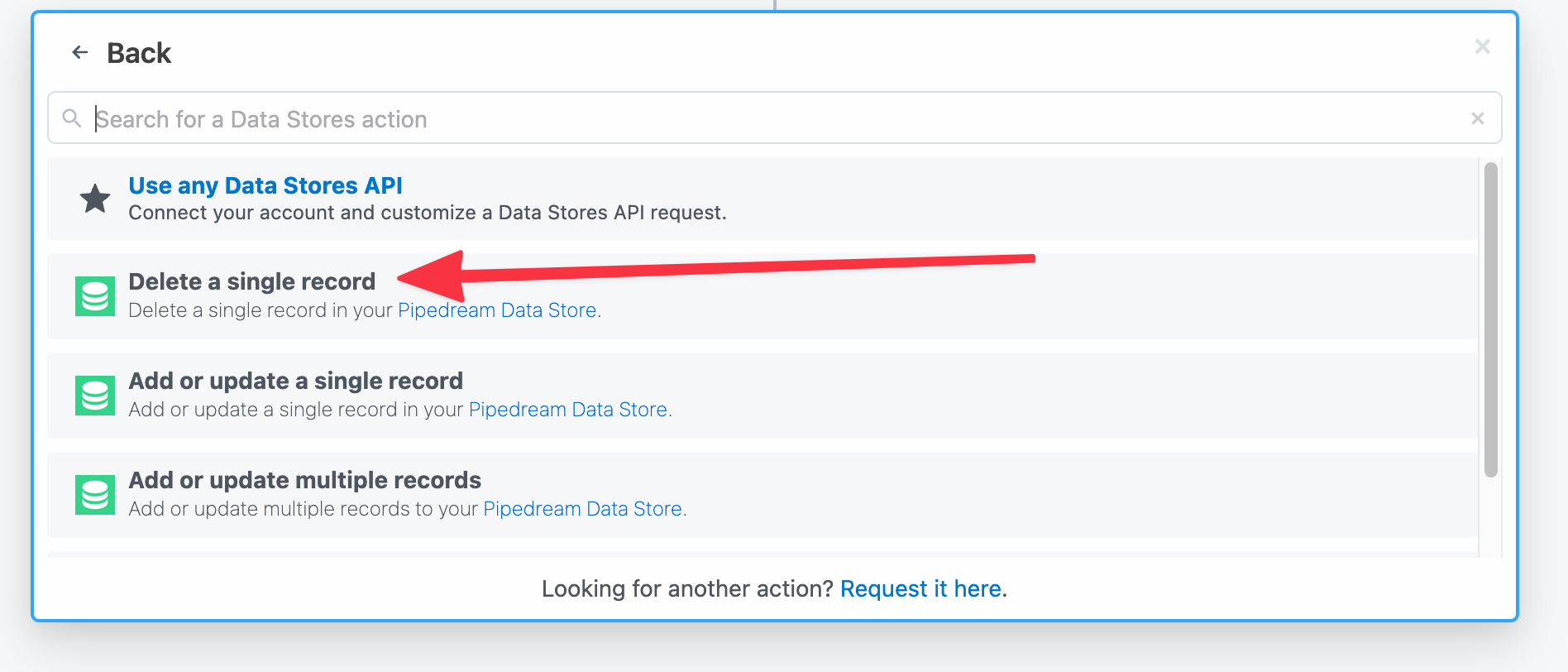

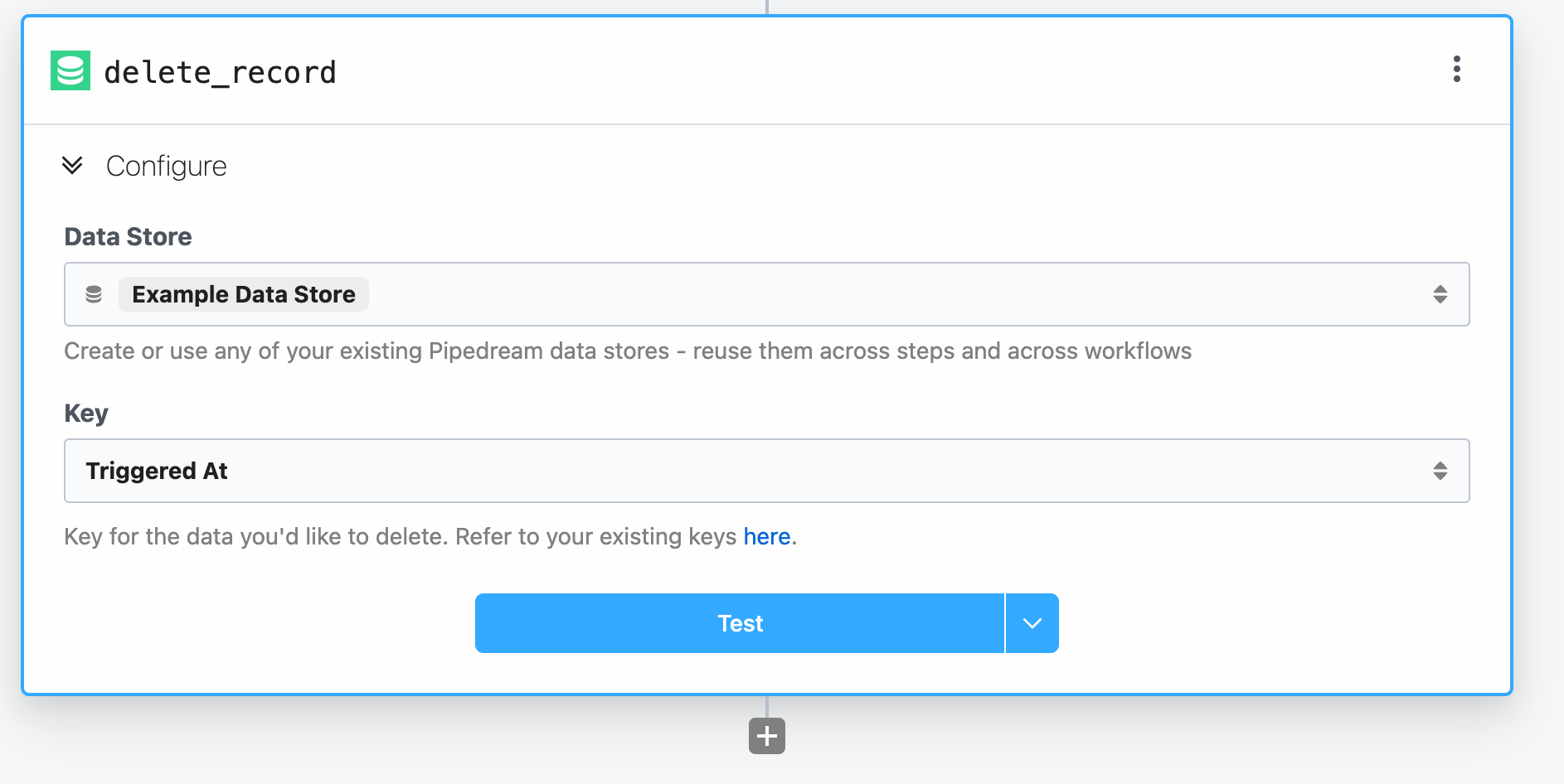

# Deleting data

To delete a single record from your data store, use the Delete a single record action in a step.

Then configure the action:

- Select a Data Store - select the data store that contains the record to be deleted

- Key - the key that identifies the individual record

For example, you can delete the data at the Triggered At key that we've created in the steps above.

Deleting a record does not delete the entire data store. To delete an entire data store, use the Pipedream Data Stores Dashboard.

# Managing data stores

You can view the contents of your data stores at any time in the Pipedream Data Stores dashboard. You can also add, edit, or delete data store records manually from this view.

# Editing data store values manually

- Select the data store

- Click the pencil icon on the far right of the record you want to edit. This will open a text box that will allow you to edit the contents of the value. When you're finished with your edits, save by clicking the checkmark icon.

# Deleting data stores

You can delete a data store from this dashboard as well. On the far right in the data store row, click the trash can icon.

WARNING

Deleting a data store is irreversible.

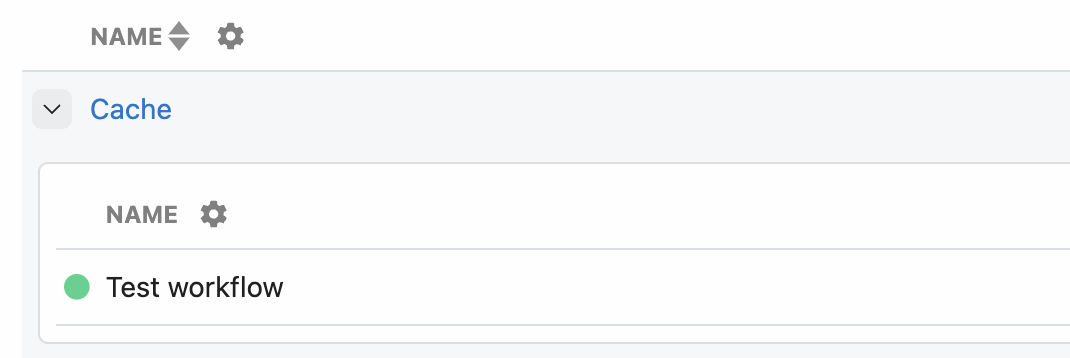

If the Delete option is greyed out and unclickable, you have workflows using the data store in a step. Click the > to the left of the data store's name to expand the linked workflows.

Then remove the data store from any linked steps.

# Using data stores in code steps

Refer to the Node.js and Python data store docs to learn how to use data stores in code steps. You can get, set, delete and perform any other data store operations in code. You cannot use data stores in Bash or Go code steps.

# Compression

Data saved in data stores is Brotli-compressed, minimizing storage. The total compression ratio depends on the data being compressed. To test this on your own data, run it through a package that supports Brotli compression and measure the size of the data before and after.
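One way to run that measurement is with Node's built-in `zlib` module. The sketch below serializes a value to JSON and compares byte counts before and after Brotli compression. The exact encoding and compression settings Pipedream applies are not stated here, so treat the numbers as an estimate; `measureBrotli` and `sampleRecords` are hypothetical names, and the sample data is made up.

```javascript
// Sketch: compare the size of a JSON-serialized value before and after
// Brotli compression using Node's default Brotli settings.
async function measureBrotli(value) {
  // Dynamic import works from both ES modules and CommonJS files.
  const { brotliCompressSync } = await import("node:zlib");
  const raw = Buffer.from(JSON.stringify(value), "utf8");
  const compressed = brotliCompressSync(raw);
  return {
    rawBytes: raw.length,
    compressedBytes: compressed.length,
    ratio: raw.length / compressed.length,
  };
}

// Made-up sample data: repetitive records like these tend to compress well.
const sampleRecords = Array.from({ length: 500 }, (_, i) => ({
  id: i,
  status: "active",
  plan: "free",
}));

measureBrotli(sampleRecords).then((result) => console.log(result));
```

Swap `sampleRecords` for a representative sample of your own data to get a more realistic ratio.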

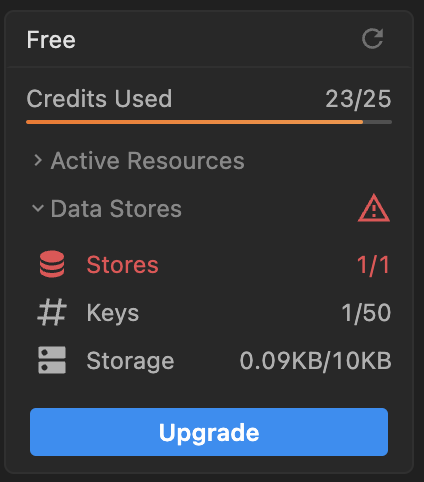

# Data store limits

Depending on your plan, Pipedream sets limits on:

- The total number of data stores

- The total number of keys across all data stores

- The total storage used across all data stores, after compression

You'll find your workspace's limits in the Data Stores section of the usage dashboard in the bottom-left of https://pipedream.com.

# Supported data types

Data stores can hold any JSON-serializable data within the storage limits. This includes:

- Strings

- Objects

- Arrays

- Dates

- Integers

- Floats

But you cannot serialize functions, classes, sets, maps, or other complex objects.
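To see what "JSON-serializable" means in practice, the sketch below round-trips an object through `JSON.stringify` and `JSON.parse`. It assumes stored values go through standard JSON serialization, which is an assumption about the internals rather than something stated above; `jsonRoundTrip` and the sample object are hypothetical.

```javascript
// Round-trip a value through JSON, approximating what a JSON-serializing
// store would do to it.
function jsonRoundTrip(value) {
  return JSON.parse(JSON.stringify(value));
}

const original = {
  name: "example",
  count: 3,
  price: 9.99,
  tags: ["a", "b"],
  createdAt: new Date("2024-01-01T00:00:00.000Z"),
  uniqueIds: new Set([1, 2, 3]),
  lookup: new Map([["k", "v"]]),
  greet() {
    return "hi";
  },
};

const restored = jsonRoundTrip(original);

console.log(typeof restored.createdAt); // "string" (ISO 8601 text, not a Date object)
console.log(restored.uniqueIds); // {} (the Set's members are lost)
console.log(restored.lookup); // {} (the Map's entries are lost)
console.log("greet" in restored); // false (functions are dropped)
```

Under that assumption, convert sets and maps to arrays or plain objects before saving them, and rebuild `Date` objects from the stored string after reading.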

# Retrieving a large number of keys

You can retrieve up to 1,024 keys from a data store in a single query.

If you're using a pre-built action or code to retrieve all records or keys, and your data store contains more than 1,024 records, you'll receive a 426 error.

# Exporting data to an external service

In order to stay within the data store limits, you may need to export the data in your data store to an external service.

The following Node.js example action will export the data in chunks via an HTTP POST request. You may need to adapt the code to your needs. Click on this link to create a copy of the workflow in your workspace.

TIP

If the data contained in each key is large, consider lowering chunkSize.

Adjust your workflow memory and timeout settings according to the size of the data in your data store. Start with 512 MB of memory and a 60-second timeout, and increase them if needed.

Monitor the exports of this step after each execution for any potential errors preventing a full export. Run the step as many times as needed until all your data is exported.

WARNING

This action deletes the keys that were successfully exported. It is advisable to first run a test without deleting the keys. In case of any unforeseen errors, your data will still be safe.

```javascript
import { axios } from "@pipedream/platform"

export default defineComponent({
  props: {
    dataStore: {
      type: "data_store",
    },
    chunkSize: {
      type: "integer",
      label: "Chunk Size",
      description: "The number of items to export in one request",
      default: 100,
    },
    shouldDeleteKeys: {
      type: "boolean",
      label: "Delete keys after export",
      description: "Whether the data store keys will be deleted after export",
      default: true,
    }
  },
  methods: {
    // Group the items of an async iterator into arrays of up to chunkSize items
    async *chunkAsyncIterator(asyncIterator, chunkSize) {
      let chunk = []
      for await (const item of asyncIterator) {
        chunk.push(item)
        if (chunk.length === chunkSize) {
          yield chunk
          chunk = []
        }
      }
      // yield the final, partially filled chunk
      if (chunk.length > 0) {
        yield chunk
      }
    },
  },
  async run({ steps, $ }) {
    // each item yielded by the data store is a [key, value] pair
    const iterator = this.chunkAsyncIterator(this.dataStore, this.chunkSize)
    for await (const chunk of iterator) {
      try {
        // export data to external service
        await axios($, {
          url: "https://external_service.com",
          method: "POST",
          data: chunk,
          // may need to add authentication
        })

        // delete exported keys and values
        if (this.shouldDeleteKeys) {
          await Promise.all(chunk.map(([key]) => this.dataStore.delete(key)))
        }

        // keys() may be subject to the 1,024-key retrieval limit described above;
        // an error here is caught below even though this chunk was exported
        console.log(`number of remaining keys: ${(await this.dataStore.keys()).length}`)
      } catch (e) {
        // an error occurred, don't delete keys
        console.log(`error exporting data: ${e}`)
      }
    }
  },
})
```